Tea-Talk | Towards Efficient Representation and Future Context Transformers for Goal Conditioned Planning

Abstract

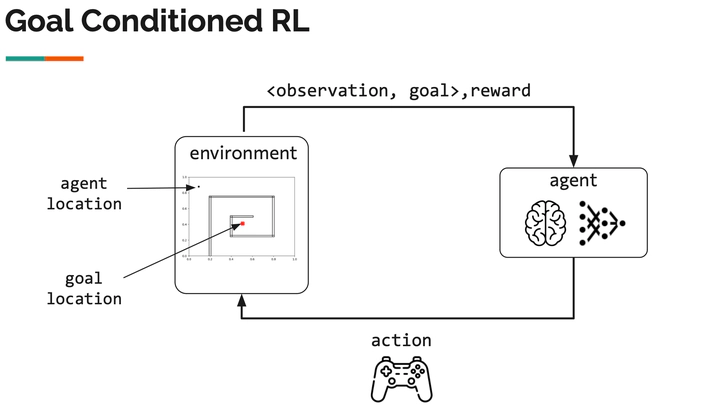

Learning task-agnostic policies is essential for creating versatile AI agents that can adapt to a variety of tasks. In this talk, we first highlight how current latent representation mechanisms fail to accurately capture the environment’s geometry, which limits their usefulness for learning reactive policies or for use with off-the-shelf planners. To address this, we introduce Plannable Continuous Latent States (PcLast), a representation learning mechanism that combines a temporal contrastive loss with multi-step inverse dynamics to produce robust representations that capture the world’s geometry despite exogenous noise. Second, we discuss the limitations of decision transformers in goal-conditioned tasks where the world configuration changes. We improve decision-making by integrating implicit local dynamics information: short-term imagined future rollouts are supplied as context to the transformer. We refer to this formulation as the Future Context Decision Transformer and share preliminary results.